VideoDB Retriever#

RAG: Instantly Search and Stream Video Results 📺#

VideoDB is a serverless database designed to streamline the storage, search, editing, and streaming of video content. VideoDB offers random access to sequential video data by building indexes and developing interfaces for querying and browsing video content. Learn more at docs.videodb.io.

Constructing a RAG pipeline for text is relatively straightforward, thanks to the tools developed for parsing, indexing, and retrieving text data. However, adapting RAG models for video content presents a greater challenge. Videos combine visual, auditory, and textual elements, requiring more processing power and sophisticated video pipelines.

While Large Language Models (LLMs) excel with text, they fall short in helping you consume or create video clips. VideoDB provides a sophisticated database abstraction for your MP4 files, enabling the use of LLMs on your video data. With VideoDB, you can not only analyze but also instantly watch video streams of your search results.

In this notebook, we introduce VideoDBRetriever, a tool specifically designed to simplify the creation of RAG pipelines for video content, without any hassle of dealing with complex video infrastructure.

🛠️️ Setup connection#

Requirements#

To connect to VideoDB, simply get the API key and create a connection. This can be done by setting the VIDEO_DB_API_KEY environment variable. You can get it from 👉🏼 VideoDB Console. ( Free for first 50 uploads, No credit card required! )

Get your OPENAI_API_KEY from OpenAI platform for llama_index response synthesizer.

import os

os.environ["OPENAI_API_KEY"] = ""

os.environ["VIDEO_DB_API_KEY"] = ""

Installing Dependencies#

To get started, we’ll need to install the following packages:

llama-indexllama-index-retrievers-videodbvideodb

%pip install llama-index

%pip install videodb

%pip install llama-index-retrievers-videodb

Data Ingestion#

Let’s upload a few video files first. You can use any public url, Youtube link or local file on your system. First 50 uploads are free!

from videodb import connect

# connect to VideoDB

conn = connect()

# upload videos to default collection in VideoDB

print("uploading first video")

video1 = conn.upload(url="https://www.youtube.com/watch?v=lsODSDmY4CY")

print("uploading second video")

video2 = conn.upload(url="https://www.youtube.com/watch?v=vZ4kOr38JhY")

coll = conn.get_collection(): Returns default collection object.

coll.get_videos(): Returns list of all the videos in a collections.

coll.get_video(video_id): Returns Video object from givenvideo_id.

Indexing#

To search bits inside a video, you have to index the video first. We have two types of indexing possible for a video.

index_spoken_words: Indexes spoken words in the video.index_scenes: Indexes visuals of the video.(Note: This feature is currently available only for beta users, join our discord for early access)https://discord.gg/py9P639jGz

print("Indexing the videos...")

video1.index_spoken_words()

video2.index_spoken_words()

Indexing the videos...

100%|████████████████████████████████████████████████████████████████████████████████████████████████████| 100/100 [00:39<00:00, 2.56it/s]

100%|████████████████████████████████████████████████████████████████████████████████████████████████████| 100/100 [00:39<00:00, 2.51it/s]

Querying#

Now that the videos are indexed, we can use VideoDBRetriever to fetch relevant nodes from VideoDB.

from llama_index.retrievers.videodb import VideoDBRetriever

from llama_index.core import get_response_synthesizer

from llama_index.core.query_engine import RetrieverQueryEngine

# VideoDBRetriever by default uses the default collection in the VideoDB

retriever = VideoDBRetriever()

# use your llama_index response_synthesizer on search results.

response_synthesizer = get_response_synthesizer()

query_engine = RetrieverQueryEngine(

retriever=retriever,

response_synthesizer=response_synthesizer,

)

# query across all uploaded videos to get the text answer.

response = query_engine.query("What is Dopamine?")

print(response)

Dopamine is a neurotransmitter that plays a key role in various brain functions, including motivation, reward, and pleasure. It is involved in regulating mood, movement, and cognitive function.

response = query_engine.query("What's the benefit of morning sunlight?")

print(response)

Morning sunlight can help trigger a cortisol pulse shift, allowing individuals to capture a morning work block by waking up early and exposing themselves to sunlight. This exposure to morning sunlight, along with brief high-intensity exercise, can assist in adjusting the cortisol levels and potentially enhancing productivity during the early hours of the day.

Watch Video Stream of Search Result#

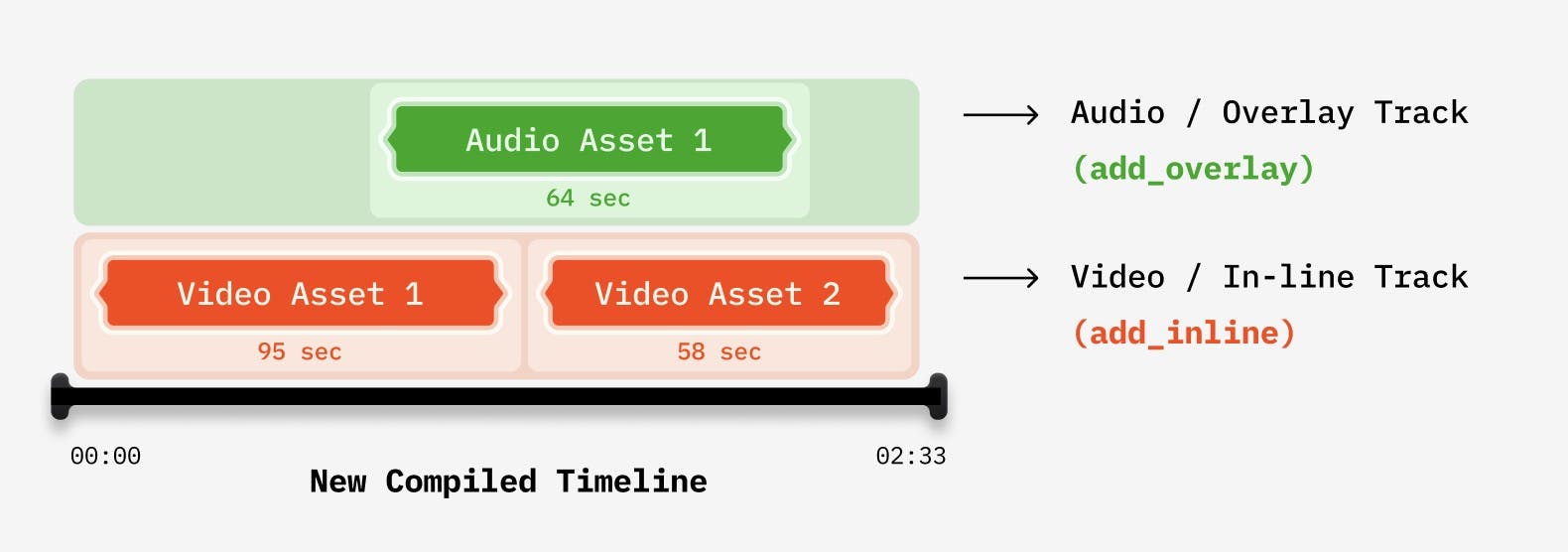

Although, The Nodes returned by Retriever are of type TextNode. They also have metadata that can help you watch the video stream of results. You can create a compilation of all Nodes using VideoDB’s Programmable video streams. You can even modify it with Audio and Image overlays easily.

from videodb import connect, play_stream

from videodb.timeline import Timeline

from videodb.asset import VideoAsset

# create video stream of search results

conn = connect()

timeline = Timeline(conn)

relevant_nodes = retriever.retrieve("What's the benefit of morning sunlight?")

for node_obj in relevant_nodes:

node = node_obj.node

# create a video asset for each node

node_asset = VideoAsset(

asset_id=node.metadata["video_id"],

start=node.metadata["start"],

end=node.metadata["end"],

)

# add the asset to timeline

timeline.add_inline(node_asset)

# generate stream for the compiled timeline

stream_url = timeline.generate_stream()

play_stream(stream_url)

'https://console.videodb.io/player?url=https://stream.videodb.io/v3/published/manifests/9c39c8a9-62a2-4b5e-b15d-8565cc58c8ae.m3u8'

Configuring VideoDBRetriever#

1. Retriever for only one Video:

You can pass the id of the video object to search in only that video.

VideoDBRetriever(video="my_video.id")

2. Retriever for different type of Indexes:

# VideoDBRetriever that uses keyword search - Matches exact occurence of words and sentences. It only supports single video.

keyword_retriever = VideoDBRetriever(search_type="keyword", video="my_video.id")

# VideoDBRetriever that uses semantic search - Perfect for question answers type of query.

semantic_retriever = VideoDBRetriever(search_type="semantic")

# [only for beta users of VideoDB] VideoDBRetriever that uses scene search - Search visual information in the videos.

visual_retriever = VideoDBRetriever(search_type="scene")

3. Configure threshold parameters:

result_threshold: is the threshold for number of results returned by retriever; the default value is5score_threshold: only nodes with score higher thanscore_thresholdwill be returned by retriever; the default value is0.2

custom_retriever = VideoDBRetriever(result_threshold=2, score_threshold=0.5)

View Specific Node#

To watch stream of each retrieved node, you can directly generate the stream of that part directly from video object of VideoDB.

relevant_nodes

[NodeWithScore(node=TextNode(id_='6ca84002-49df-4091-901d-48248dbe0977', embedding=None, metadata={'collection_id': 'c-33978c87-33e6-4259-9e27-a9edc79be9ad', 'video_id': 'm-f201ff7c-88ec-47ca-938b-a4e968676ba0', 'length': '1496.711837', 'title': 'AMA #1: Leveraging Ultradian Cycles, How to Protect Your Brain, Seed Oils Examined and More', 'start': 906.01, 'end': 974.59}, excluded_embed_metadata_keys=[], excluded_llm_metadata_keys=[], relationships={}, text=" So for somebody that wants to learn an immense amount of material, or who has the opportunity to capture another Altradian cycle, the other time where that tends to occur is also early days. So some people, by waking up early and using stimulants like caffeine and hydration or some brief high intensity city exercise, can trigger that cortisol pulse to shift a little bit earlier so that they can capture a morning work block that occurs somewhere, let's say between six and 07:30 a.m. So let's think about our typical person, at least in my example, that's waking up around 07:00 a.m. And then I said, has their first Altradian work cycle really flip on? Because that bump in cortisol around 930 or 10:00 a.m. If that person were, say, to. Set their alarm clock for 05:30 a.m. Then get up, get some artificial light. If the sun isn't out, turn on bright artificial lights. Or if the sun happens to be up that time of year, get some sunlight in your eyes. But irrespective of sunlight, were to get a little bit of brief, high intensity exercise, maybe ten or 15 minutes of skipping rope or even just jumping jacks or go out for a brief jog.", start_char_idx=None, end_char_idx=None, text_template='{metadata_str}\n\n{content}', metadata_template='{key}: {value}', metadata_seperator='\n'), score=0.440981567),

NodeWithScore(node=TextNode(id_='2244fd64-121e-4699-ba36-f0f6a110750f', embedding=None, metadata={'collection_id': 'c-33978c87-33e6-4259-9e27-a9edc79be9ad', 'video_id': 'm-eae54005-b5ca-44f1-9c31-fcdb2f1db56a', 'length': '1830.498685', 'title': 'AMA #2: Improve Sleep, Reduce Sugar Cravings, Optimal Protein Intake, Stretching Frequency & More', 'start': 899.772, 'end': 977.986}, excluded_embed_metadata_keys=[], excluded_llm_metadata_keys=[], relationships={}, text=" Because the study, as far as I know, has not been done. Whether or not doing resistance training or some other type of exercise would have led to the same effect. Although I have to imagine that if it's moderately intense to intense resistance training, provided it's done far enough away from going to sleep right prior to 6 hours before sleep, that one ought to see the same effects, although that was not a condition in this study. But it's a very nice study. They looked at everything from changes in core body temperature to caloric expenditure. They didn't see huge changes in core body temperature changes, so that couldn't explain the effect. It really appears that the major effect of improving slow wave sleep was due to something in changing the fine structure of the brainwaves that occur during slow wave sleep. In fact, and this is an important point. The subjects in this study did not report subjectively feeling that much better from their sleep. So you might say, well then, why would I even want to bother? However, it's well known that getting sufficient slow wave sleep is important not just for repair, excuse me, for repair of bodily tissues, but also for repair of brain tissues and repair and washout of debris in the brain. And that debris is known to lead to things like dementia.", start_char_idx=None, end_char_idx=None, text_template='{metadata_str}\n\n{content}', metadata_template='{key}: {value}', metadata_seperator='\n'), score=0.282342136)]

from videodb import connect

# retriever = VideoDBRetriever()

# relevant_nodes = retriever.retrieve("What is Dopamine?")

video_node = relevant_nodes[0].node

conn = connect()

coll = conn.get_collection()

video = coll.get_video(video_node.metadata["video_id"])

start = video_node.metadata["start"]

end = video_node.metadata["end"]

stream_url = video.generate_stream(timeline=[(start, end)])

play_stream(stream_url)

'https://console.videodb.io/player?url=https://stream.videodb.io/v3/published/manifests/b7201145-7302-4ec5-b87c-d1a4c6592f69.m3u8'

🧹 Cleanup#

video1.delete()

video2.delete()